I will begin by identifying, in chronological order, several events and issues that arose over the course of the project, along with their causes and solutions (mitigating negative events and facilitating positive ones). Positive events are marked (+) and negative events (-):

Illness (two colds and one diabetic complication) (-)

Afflicted at both the beginning and the end of flu season (illness has adverse effects on insulin absorption)

Preventative remedies and more efficient diabetes management/monitoring

Recording session with David and Adam (+)

A time-efficient, positive and productive session was required for the dialogue overdubs.

Maintaining a friendly, enjoyable yet productive atmosphere is key. Having the director present meant the vocal takes were exactly to his liking (no back-and-forth or additional recording sessions required).

Updates with Andrew Hill (+)

Andrew often validated my choices, steered me towards different avenues and always had useful suggestions and solutions to try as the project moved forward.

Delivery of the rough/fine cut (-)

This happened extremely late in the schedule and left little breathing room for modifications, updates and lock-offs. There were several updates which, even when seemingly small, had a large effect, particularly on the integrated LUFS reading.

Adhering to the schedule, and being more assertive in insisting on deadlines that leave adequate working time, is essential. It is a lesson well learned, albeit the hard way this time.

Mix review with Andrew Hill (+)

I was reminded that one of the last stages in producing sound assets is LUFS metering. Factoring this stage into the production schedule would probably have allowed for a more efficient mix review.

Attempts at improving Black Dog's sibilance (-)

To close the gap between "coolness" and audibility, I made several attempts at processing the recorded dialogue to get the most out of it. These included various EQ settings, toning down plug-in effects and recording plosives to accentuate the existing dialogue. A recommendation to apply an EQ boost at approximately 80 Hz rather than pitch shifting the audio clip was taken on board and tested, but the desired results were not reached quickly enough to warrant using it.

Re-recording of Black Dog's vocals to improve sibilance (+)

The final solution was to re-record the dialogue with exaggerated enunciation and to pitch-shift it by a smaller amount than before. I then time-stretched the audio back into line with the dialogue placement in the rough cut, as per the director's request.

Fine cut LUFS (-)

Tuning the mix's loudness against the LUFS meter was a fine and exhausting process, and trying to level it out before the final lock-off proved more of a hindrance than a help. Recognising the importance of this stage of post-production, and the time it requires, is essential for effective scheduling and time management.

My understanding of the workflow was confirmed by the timing of the delivery of full-scale assets, which occurred in the latter portion of the project. Google Drive facilitated file transfer, review and progress updates. I feel that, although both David and I had schedules, these were not adhered to or updated throughout the project. There were a few necessary back-and-forth moments, particularly regarding dialogue segments that required additional length for dialogue animation and for facial expression alterations.

On reflection, my contribution to a productive atmosphere towards the middle of the project was lacking. Aside from the demonstration analogue-synthesised music score, which confirmed the pace and gauged any aversion to synthesis, my contribution was minimal until the required full-length rough cuts were made available. I attribute this in equal measure to time management and illness. David took an active role in constructing elements of the audio, particularly the war "scene" at the beginning of the animation. Having provided various samples for David to select from, I was able to recreate his desired warscape and build upon it. The main issue at the final pre-showcase review was the clarity of Black Dog's dialogue. Andy pointed out that the pitch had been shifted too far, and suggested that a boost around 80 Hz on the original or on less pitch-shifted samples could work. I made some attempts, but went ahead with my original plan: overdubbing over-enunciated vocal takes, using a less pitch-shifted clip, and placing a heavily pitch-shifted clip subtly, low in the mix, to retain the sub/bass feel of the dialogue.

During all studio recording and mixing sessions, I included and consulted David on all final decisions before moving on to the next task. I believe the productive atmosphere this created allowed David to speak his mind about any readings that didn't capture the feeling he was after. It was great to have the director/creator on board, as he was able to communicate directly HOW he wanted his dialogue to be read, and I was able to direct Adam on how to deliver that dialogue into the microphone. During my final session, David was unhappy with a scream, and I was able to facilitate his scream overdubs immediately.

The intent of Dark Imaginings is to continue the discourse about depression, particularly as it relates to military casualties. The depicted act of suicide adds to the dark and serious nature of the animation. The first section of the score was a set of polyphonic rising chords on tremolo strings, blurring major and minor tonalities. My main theme consisted of the progression ||: V7 V7 | i i | V7 V7 | i VI7 :|| and used the theremin-style synth lead that I created for the demo to add to the otherworldly feel of the escape sequence.

To reflect the intensity and action of the internal thought sequence, I used an element I kept from the original synthesised demo: the tempo changes before and after the internal dream sequence. Initially represented through arpeggiation, the quickening and slowing of the heart was conveyed by the quarter-note drive of the strings leading into the sequence and by an accidental ostinato (created during MIDI sequencing), avoiding the conventional "thump-thump" of sound design. There was clipping in the final section of the score, so I replaced the theremin synth lead with a heavily reverberant piano lead. This was intended to evoke the reflective, affirmative, hope-filled conclusion. To reflect the yin-yang aspects of dog and rabbit, I used pitched-down, bassier duplicates for Black Dog's dialogue and blended higher pitch-shifted duplicates for the Jack rabbit character. The sound design remained as realistic as possible for all diegetic sounds. In our final session, David requested heavy breathing during the initial scene, which we overdubbed on the spot.

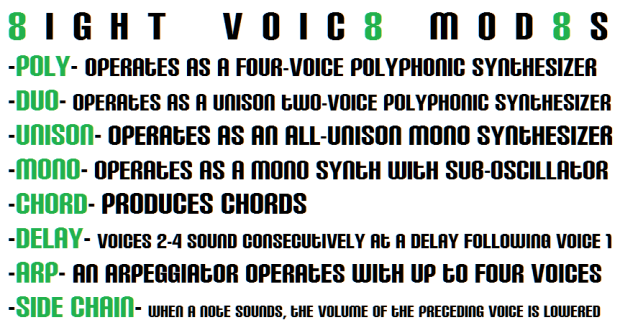

My main influence for synthesised scores on dark, imaginative projects has been Kyle Dixon and Michael Stein's STRANGER THINGS soundtrack, which has drawn comparisons to the work of film composer John Carpenter. From a production standpoint, it is obvious that Dixon and Stein are not only composers but also craftsmen, with a deep enough knowledge of analog synthesizers, like the Prophet-6 and ARP 2600, to manipulate them into producing the exact sentiment a given scene calls for, from feel-good warmth and melancholia to the darker, more mysterious nature of the story (Yoo, 2016). One of the director's guidelines was that they didn't want the score to be "too synth". "There's a part of a synthesizer called 'resonance' and it makes everything sound kinda (makes laser noises) laser-y. As long as we're not doing too much of that, I think we're in good shape. I think that's what it means, but you never know" (Dixon, quoted in Maerz, 2016). There was concern that a synthesised score was leaning towards a video game sound, so I reverted to string scoring instead and postponed an in-depth exploration of analogue synthesis, for now…

REFERENCES

Yoo, Noah (16 Aug 2016) Inside the Spellbinding Sound of Stranger Things

Retrieved from: http://pitchfork.com/thepitch/1266-inside-the-spellbinding-sound-of-stranger-things/

Maerz, Jennifer (24 Jul 2016) Obsessed with Stranger Things?

Retrieved from: http://www.salon.com/2016/07/23/obsessed_with_stranger_things_meet_the_band_behind_the_shows_spine_chilling_theme_and_synth_score/

Demon Seed (1977) was directed by Donald Cammell and stars Julie Christie as the wife of a scientist (Fritz Weaver) who has invented the Proteus IV supercomputer. However, Proteus soon develops the need to procreate, and uses Christie as the means to that end, trapping her in her house and terrorizing her. Jerry Fielding's avant-garde score was a high-water mark in the composer's experimentation, featuring eerie suspense and violence as Proteus and Christie engage in a battle of wills.

The development of musique concrète was facilitated by the emergence of new music technology in post-war Europe. Access to microphones, phonographs, and later magnetic tape recorders (created in 1939

The score and soundscapes during the many "dream" sequences reflect their uneasy and otherworldly nature. The Foley is consistently disconcerting and appropriate, especially the electronic hum mixed with mechanical clicks for Proteus's "arm chair" (a wheelchair with a mechanical arm attached) and the large array of tones and weird, bubbly noises for Proteus's bizarre geometric manifestation.

As an introductory aid to analogue synthesis, I find the Minilogue exceptional. Its built-in oscilloscope display adds a visual element to exploring and manipulating sounds. This is just the tip of the Minilogue iceberg: there is a range of features and functions (e.g. the 16-step sequencer) that I have yet to delve into, but I am thoroughly looking forward to the experience, process and learning this will facilitate.
